CSV vs. Parquet vs. AVRO: Pick the Optimal Format for Your Data Pipeline

Not all file formats are equal. Learn when to use CSV, Parquet, or AVRO for the best balance of performance, scalability, and storage efficiency.

Together with

Product for Engineers

Product for Engineers is PostHog’s newsletter dedicated to helping engineers and founders improve their product skills. Subscribe for free to get curated advice on building great products, lessons (and mistakes) they’ve learned building PostHog, and deep dives on the culture and strategies of top startups.

Greetings, curious reader,

Building a data lake from scratch can feel overwhelming. So many decisions and so many trade-offs.

Which file format should you use?

Sounds simple, right? Wrong!

Most data engineers default to what they know. Some copy what they've seen. The best ones research before making a choice.

CSV, Parquet, and AVRO are the top contenders. Each has strengths. Each has weaknesses. Pick the wrong one and risk slow queries, bloated storage, or painful integrations.

Bad file format choices waste time and money. Imagine running massive queries on CSV files—your costs soar, and performance tanks. Or you choose Parquet when you need real-time streaming, and nothing works as expected.

Today, you and I will break down these formats: how they work when to use them, and when to avoid them.

Want to play with the code? Check the GitHub repository.

📄 CSV: The Simplicity Tzar

CSV (Comma-Separated Values) is the simplest and most widely used file format in data engineering. It stores data as plain text, where each line represents a record, and columns are separated by a delimiter—usually a comma (,).

CSV files are human-readable, easy to edit, and supported by nearly every tool. You can open them in Notepad, VS Code, or even Excel. This makes CSV the default choice for data exchange, but it also comes with limitations.

⚙️ How CSV Works

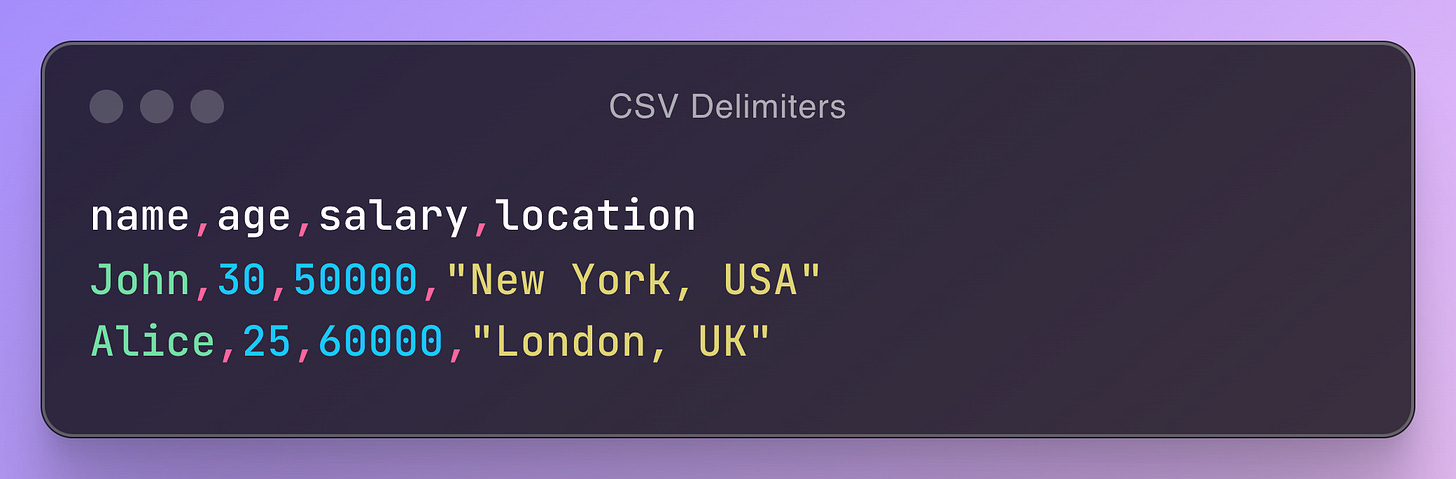

At its core, CSV is just structured text. Let’s break down a simple example:

Each row is a separate record, and values are separated by commas. The first row often contains column names (headers), which act as a schema. However, unlike formats like Parquet or AVRO, CSV does not enforce schema consistency.

✂️ Delimiters: More Than Just Commas

Although CSV stands for Comma-Separated Values, commas aren’t the only delimiters used:

Semicolon (

;): Common in countries where commas are used as decimal points.Tab (

\t): Often used in TSV (Tab-Separated Values) files.Pipe (

|): Found in log files or when data contains commas.

If the data itself contains the delimiter, values must be enclosed in quotes:

🐍 How To Use CSV with Python

Python includes built-in support for CSV through the csv module. No external libraries are needed.

💾 Writing to a CSV File

This code creates a CSV file with three records. The newline="" argument prevents extra blank lines on Windows.

🔍 Reading from a CSV File

CSV files do not store explicit data types. Everything is read as a string, so you must manually convert numeric values. It requires some guesswork when it comes to data types.

👍 Upsides of CSV

👀 Upside #1: Human-Readable and Easy to Edit

CSV files are just plain text. You can open and modify them using any text editor or spreadsheet tool.

There’s no need for specialised software, making CSV ideal for manual data entry, quick debugging, and ad-hoc data analysis.

Let’s take for an example a data analyst receives a report in CSV format and needs to tweak a few values. They can open it in Excel, edit it, and save it—no coding required.

🌍 Upside #2: Universal Compatibility

CSV is supported by almost every software that handles data. You can load CSVs into databases, spreadsheets, and programming languages like Python, R, and Java without conversion.

A team working with SQL, Pandas, and Excel can easily share CSV files without format compatibility issues.

📦 Upside #3: Ideal for Small Datasets and Data Sharing

CSV works well for small to medium-sized datasets where performance isn’t a concern. It’s also widely used for exporting and importing data between systems.

Many SaaS application allow users to export reports in CSV format so they can analyse data in Excel.

👎 The Downsides of CSV

🔡 Downside #1: No Data Types

CSV files do not store data types. Numbers, dates, and booleans are treated as plain text. This can lead to issues when reading data into programming languages.

When loaded into Python, "30", "50000", and "TRUE" are all strings, not integers or booleans. You must manually convert them:

Without type enforcement, errors can easily creep into data pipelines.

🏋️ Downside #2: Large File Sizes

CSV files store data inefficiently because they store values in every row and do not support compression.

Example: A CSV file with 1 million rows containing timestamps repeats the date format in every row. In contrast, Parquet compresses and optimises storage.

Storing large CSV files in a data lake increases storage costs. Reading large CSV files takes longer, affecting performance.

🐢 Downside #3: Slow Read and Write Performance

CSV is not optimised for big data processing. Since it’s a row-based format with no indexing, reading large files requires scanning everything from top to bottom.

Example:

Small dataset: Reading a 10MB CSV file in Pandas is fast.

Large dataset: Reading a 10GB CSV file is slow.

Performance issues arise when handling CSV at scale, making it unsuitable for data lakes and large ETL jobs.

📊 Parquet: The Columnar Powerhouse

Parquet is a binary file format designed for efficient storage and fast queries. Unlike traditional row-based formats, Parquet organises data by column. This makes it ideal for large volumes, analytics, and cloud storage.

Parquet files also embed schema and metadata, ensuring that data types and structure are preserved. This self-describing nature reduces errors and improves data integrity. Parquet is widely used in data lakes, cloud storage (S3, GCS, ABS), and distributed computing frameworks (Spark, Hive, Presto).

⚙️ How Parquet Works

Parquet stores data in a columnar format, meaning it groups values by column rather than row. This improves query performance and allows for better compression.

🏛️ Columnar Storage

Instead of storing data row-by-row, Parquet stores each column separately:

This means queries that require only specific columns can read them directly without scanning unnecessary data.

Also, each Parquet file includes metadata that describes:

Column names

Data types (int, float, string, boolean)

Compression settings

File statistics (e.g., min/max values)

This ensures consistency across different systems and prevents data corruption.

🐍 How To Use Parquet with Python

Python doesn’t support Parquet natively, so external libraries like pyarrow or fastparquet are required.

💾 Writing to a Parquet File

Parquet automatically detects data types, reducing the need for manual conversions.

🔍 Reading from a Parquet File

Since Parquet files store schema and metadata, reading them is straightforward and doesn’t require defining column types manually.

👍 Upsides of Parquet

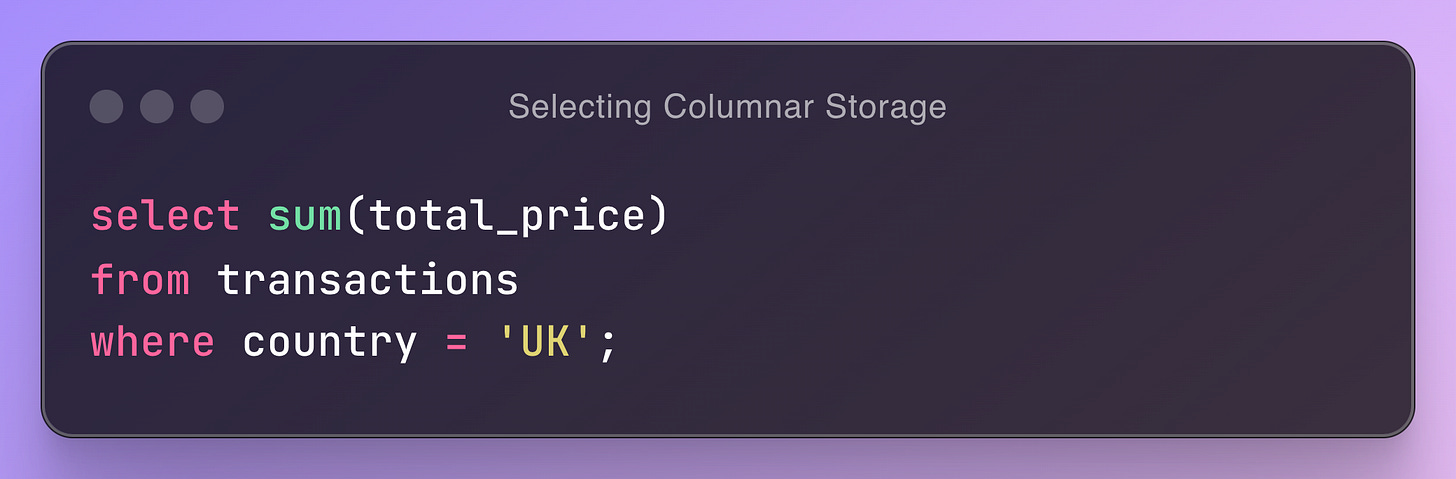

🗄️ Upside #1: Columnar Storage

Parquet stores data by column, making analytical queries much faster. Instead of scanning entire rows, query engines read only the columns they need.

Example:

A data team is analysing 1 billion sales transactions stored in Parquet. They run the following query:

Since Parquet stores columns separately, the query engine only reads the total_price and country columns—ignoring all other fields. This results in a 10x speed improvement compared to a row-based format.

🗜️ Upside #2: Built-in Compression

Parquet supports advanced compression algorithms such as:

Snappy: Fast and widely used

Gzip: High compression ratio

ZSTD: Best for high-performance applications

Compression is highly effective because similar values are grouped in columns, significantly reducing file size.

🛠️ Upside #3: Schema Enforcement

Parquet files embed schema within the file, ensuring:

Column names remain consistent

Data types are enforced

Null values are handled correctly

This prevents data corruption and reduces errors in data pipelines.

For example, if a dataset expects an integer for age, inserting a string like "thirty" would trigger an error rather than silently corrupting the data.

👎 The Downsides of Parquet

🔐 Downside #1: Not Human-Readable

Parquet files are binary, meaning they cannot be opened in a text editor. You need specialised tools such as:

Python (

pyarrow,fastparquet)Spark (

spark.read.parquet)

A text-based format might be more practical for quick manual edits or debugging.

🐢 Downside #2: Slower for Row-Based Operations

Parquet is optimised for column-based analytics, but it is less efficient for row-based operations, such as:

Frequent inserts

Updates to individual records

Transactional workloads

A row-based format might be more suitable if data is frequently updated or new rows are continuously appended.

🐘 Downside #3: Performance Overhead for Small Files

Parquet is optimised for large datasets but performs poorly with many small files. Each Parquet file contains metadata, compression settings, and row groups, which adds overhead.

If your dataset consists of many small Parquet files, queries can slow down because engines must process metadata for each file separately.

For example, having thousands of small Parquet files can increase query latency in cloud storage or distributed systems. Compaction strategies (merging small files into larger ones) are often necessary.

If your workload involves frequent small file writes, another format like AVRO might be better.

⚡ AVRO: The Streaming Specialist

AVRO is a binary row-based file format for efficient data serialisation and schema evolution. Unlike Parquet, which stores data column-by-column, AVRO organises data row-by-row, making it ideal for streaming, messaging systems, and transactional workloads.

One of AVRO’s standout features is schema evolution. It embeds its schema inside the file in JSON format. This allows applications to read old and new versions of data without breaking compatibility.

AVRO is commonly used in Apache Kafka and distributed processing frameworks.

⚙️ How AVRO Works

AVRO stores data in a binary format while keeping the schema separately in JSON. This allows data consumers to read AVRO files without needing predefined schemas.

Each AVRO file consists of:

Header: Contains metadata, including the schema in JSON format.

Data: Stored in a binary, compact format for fast serialisation.

This structure ensures that schema changes do not break existing data pipelines over time.

This schema defines three fields (name, age, salary) and their respective data types.

🐍 How To Use AVRO with Python

To work with AVRO files in Python, you need the avro library.

💾 Writing to an AVRO File

Unlike other formats, AVRO requires defining a schema before writing data. This ensures consistent data types and prevents schema drift.

🔍 Reading from an AVRO File

Since AVRO files store both data and schema, they are self-describing and easy to interpret across different applications.

👍 Upsides of Avro

🔄 Upside #1: Schema Evolution

AVRO allows schemas to evolve over time. You can add, remove, or modify fields without breaking compatibility. This makes it well-suited for long-term data storage, where requirements may change over time.

For example, suppose a system initially stores just name and age. Later, a salary field is added. Older records that don’t have salary remain readable, while new records contain the extra field. This flexibility is crucial for growing datasets and versioned data models.

Many data formats require strict schema enforcement, making changes difficult. AVRO's built-in support for schema evolution removes this friction and allows for a more dynamic approach to data management.

AVRO allows schemas to evolve over time. You can add, remove, or modify fields without breaking compatibility. This makes it well-suited for long-term data storage, where requirements may change over time.

For example, suppose a system initially stores just name and age. Later, a salary field is added.

Older records that don’t have salary remain readable, while new records contain the extra field. This flexibility is crucial for growing datasets and versioned data models.

Many data formats require strict schema enforcement, making changes difficult. AVRO's built-in support for schema evolution removes this friction and allows for a more dynamic approach to data management.

🗜️ Upside #2: Compact Binary Format

AVRO is smaller and faster than text-based formats. It minimises file size while allowing quick serialisation and deserialisation. This is essential for high-speed data streams and real-time applications.

Because AVRO stores data in a compact binary format, it reduces network bandwidth when transmitting messages between systems. This makes it a top choice for Apache Kafka, where efficiency matters.

A typical Kafka pipeline can process millions of AVRO messages per second without significant overhead.

The ability to store structured data in a small footprint also benefits cloud storage and distributed databases. Less storage means lower costs and faster reads mean better performance.

🏷️ Upside #3: Self-Describing Format

Since AVRO stores schema inside the file, applications can read AVRO files without predefined schema definitions. This simplifies data exchange across different systems.

For example, a data producer in one team can send an AVRO file to a consumer in another team without prior schema coordination. The consumer reads the schema embedded inside the file and processes the data accordingly.

AVRO is particularly useful in loosely coupled architectures like microservices and data lakes.

Unlike formats that rely on external schema registries, AVRO ensures data remains self-contained and portable.

👎 Downsides of AVRO

🔐 Downside #1: Not Human-Readable

AVRO files are stored in binary format, meaning they cannot be opened in a text editor. Unlike JSON or CSV, you can’t manually inspect AVRO data without specialised tools.

This makes debugging more difficult. Engineers often need tools like Apache AVRO CLI, Python (avro), or Java (Apache Avro) to inspect or modify AVRO files. If quick manual edits are required, another format might be more practical.

This limitation also affects data sharing. If analysts need to review raw data, AVRO may not be ideal. Instead, businesses often convert AVRO to JSON or CSV before sharing it with non-technical users.

🐢 Downside #2 Slower for Analytical Queries

AVRO is row-based, which makes it less efficient for analytical workloads. If a query needs to compute the average salary of all employees, AVRO must read every row to extract the salary field.

Columnar formats like Parquet are much faster for these queries because they store data by column. With Parquet, the query engine can scan only the salary column instead of reading the entire dataset.

AVRO is not the best choice for analytics workloads. It is more suited for streaming, transactional workloads, and log storage, where entire rows are frequently read and written.

🏗️ Downside #3: Schema Management Complexity

AVRO’s schema evolution is a powerful feature but requires careful management. Consumers must handle schema changes properly since the schema is embedded in the file. If different teams use different schema versions, compatibility issues can arise.

For example, the pipeline may break if a producer adds a new required field and a consumer isn't updated.

To avoid this, teams often use a schema registry (like Confluent Schema Registry for Kafka) to track versions and enforce compatibility rules. But this adds operational overhead—schema validation, versioning, and compatibility checks become part of the workflow.

Parquet might be a better fit if your data structure is stable and schema changes are rare. However, if schema flexibility is needed, be prepared to invest in schema governance.

Enjoyed this newsletter? Please show some love on LinkedIn or Bluesky or forward it to friends. It really helps!

🤔 Choosing the Right Option

✅ When to Use CSV

Small Datasets: CSV is easy to use if performance isn’t a concern.

Manual Data Entry and Editing: Great for self-service analytics, where users modify data in spreadsheets.

Simple Reporting: When exporting tabular data from SQL databases or applications.

🚫 When Not to Use CSV

Big Data Processing: Slow read/write speeds and large file sizes make it inefficient.

Schema Enforcement Needed: Without explicit data types, errors can occur in pipelines.

Frequent Updates: Since CSV files are plain text, modifying specific rows is slow and cumbersome.

✅ When to Use Parquet

Large Volume Processing: Works well in data lakes and distributed computing.

Analytical Queries: Optimised for aggregations and column-based queries.

Efficient Storage: Reduces file size and minimises storage costs.

🚫 When Not to Use Parquet

Frequent Row Updates: Slower for transactional operations.

Small-Scale Data Sharing: If data needs to be easily readable, another format might be better.

Small File Overhead: Parquet performs best with large files. Too many small Parquet files can slow down queries, requiring file compaction strategies.

✅ When to Use AVRO

Streaming and Messaging Systems: Works well with Kafka and event-driven pipelines.

Long-Term Data Storage: Schema evolution makes it ideal for changing data models.

Row-Based Processing: Great for transactional workloads and frequent inserts.

🚫 When Not to Use AVRO

Analytical Queries: Columnar formats like Parquet are better for big data analytics.

Human-Readable Data: AVRO is not ideal if data needs to be manually inspected.

Schema Complexity: Requires careful schema versioning and management.

💭 Final Thoughts

Parquet and AVRO were designed when data lakes were just storage layers. Now, platforms need versioning, schema evolution, and transactions.

Instead of replacing file formats, tools like Iceberg and Delta Lake extend them. They add features, but Parquet and AVRO still do the heavy lifting.

This raises a question: Are file formats evolving, or are we just managing them better?

The real challenge isn’t picking one format. It’s making them work together. Most pipelines use multiple formats, converting between them many times.

The future isn’t about new file formats. It’s about smarter systems that choose the best format for each workload. Maybe one day, file formats won’t matter. The system will decide for you.

🏁 Summary

Choosing between CSV, Parquet, and AVRO depends on how you store, process, and query your data. Each format has its own trade-offs, and picking the wrong one can lead to performance bottlenecks, high storage costs, or compatibility issues.

CSV is simple, human-readable, and widely supported, but it lacks schema enforcement, efficient storage, and fast queries.

Parquet is built for big data and analytics, offering columnar storage and compression, but it requires external tools and isn’t ideal for row-based updates.

AVRO is excellent for streaming and schema evolution, making it a strong choice for Kafka and event-driven architectures, but it isn’t optimised for analytical queries.

If you need quick exports or simple data exchange, go with CSV.

For analytical workloads and cloud storage, choose Parquet.

If schema evolution and fast serialisation are your priority, AVRO is the best fit.

Before making a final decision, consider query patterns, data growth, and long-term compatibility.

Until next time,

Yordan from Data Gibberish

🚀 What’s Next?

💬 Leave a Testimonial

Love what you’re reading? Share your thoughts to help new readers discover Data Gibberish. Your words mean a lot.

🤝 Join the Community

Weekly newsletters can only do so much! Join our exclusive Discord for deeper dives into software & data engineering, leadership, and the creator economy. Let’s level up together.

![import csv filename = "data.csv" with open(filename, mode="w", newline="") as file: writer = csv.writer(file) # Define the schema (headers) writer.writerow(["name", "age", "salary"]) # Write data rows writer.writerow(["John", 30, 50000]) writer.writerow(["Alice", 25, 60000]) writer.writerow(["Bob", 40, 70000]) import csv filename = "data.csv" with open(filename, mode="w", newline="") as file: writer = csv.writer(file) # Define the schema (headers) writer.writerow(["name", "age", "salary"]) # Write data rows writer.writerow(["John", 30, 50000]) writer.writerow(["Alice", 25, 60000]) writer.writerow(["Bob", 40, 70000])](https://substackcdn.com/image/fetch/$s_!xiIw!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F2b105642-7f6b-4805-a6b1-b214dae0f571_2080x1672.png)

![# Convert age to integer age = int(row[1]) # Convert salary to float salary = float(row[2]) # Convert to boolean is_manager = row[3] == "TRUE" # Convert age to integer age = int(row[1]) # Convert salary to float salary = float(row[2]) # Convert to boolean is_manager = row[3] == "TRUE"](https://substackcdn.com/image/fetch/$s_!nxw2!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fbf8d07c6-eec6-4a7d-aa2e-da1e1d6504d9_2080x1132.png)

![Column: name → ["John", "Alice", "Bob"] Column: age → [30, 25, 40] Column: salary → [50000, 60000, 70000] Column: name → ["John", "Alice", "Bob"] Column: age → [30, 25, 40] Column: salary → [50000, 60000, 70000]](https://substackcdn.com/image/fetch/$s_!a9-O!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F33a24620-f7e9-4eb9-ae3f-7eb41bdc42b9_2080x684.png)

![import pyarrow.parquet as pq import pyarrow as pa filename = "data.parquet" # Create a table with columnar storage data = { "name": ["John", "Alice", "Bob"], "age": [30, 25, 40], "salary": [50000, 60000, 70000] } table = pa.Table.from_pydict(data) # Write to Parquet pq.write_table(table, filename) import pyarrow.parquet as pq import pyarrow as pa filename = "data.parquet" # Create a table with columnar storage data = { "name": ["John", "Alice", "Bob"], "age": [30, 25, 40], "salary": [50000, 60000, 70000] } table = pa.Table.from_pydict(data) # Write to Parquet pq.write_table(table, filename)](https://substackcdn.com/image/fetch/$s_!bcw-!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F33a99e2a-af26-4a45-b030-4187cf4a3be6_2080x1764.png)

![{ "type": "record", "name": "Employee", "fields": [ {"name": "name", "type": "string"}, {"name": "age", "type": "int"}, {"name": "salary", "type": "float"} ] } { "type": "record", "name": "Employee", "fields": [ {"name": "name", "type": "string"}, {"name": "age", "type": "int"}, {"name": "salary", "type": "float"} ] }](https://substackcdn.com/image/fetch/$s_!Iznb!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F24798cdb-3adc-4e95-b341-7a82f622408b_2080x1224.png)

![import avro.schema import avro.datafile import avro.io filename = "data.avro" # Define AVRO schema schema_str = """{ "type": "record", "name": "Employee", "fields": [ {"name": "name", "type": "string"}, {"name": "age", "type": "int"}, {"name": "salary", "type": "float"} ] }""" schema = avro.schema.parse(schema_str) # Create an AVRO file with open(filename, "wb") as out_file: writer = avro.datafile.DataFileWriter( out_file, avro.io.DatumWriter(), schema ) writer.append({ "name": "John", "age": 30, "salary": 50000.0 }) writer.close() import avro.schema import avro.datafile import avro.io filename = "data.avro" # Define AVRO schema schema_str = """{ "type": "record", "name": "Employee", "fields": [ {"name": "name", "type": "string"}, {"name": "age", "type": "int"}, {"name": "salary", "type": "float"} ] }""" schema = avro.schema.parse(schema_str) # Create an AVRO file with open(filename, "wb") as out_file: writer = avro.datafile.DataFileWriter( out_file, avro.io.DatumWriter(), schema ) writer.append({ "name": "John", "age": 30, "salary": 50000.0 }) writer.close()](https://substackcdn.com/image/fetch/$s_!o3tj!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F674841b4-3cdb-4e1e-acd9-dacfa206813b_2080x3204.png)