The Harsh Reality About The Open-Source Data Stack

Should you build or buy your tooling, and why?

The data world has embraced many of the best software engineering practices. The modern data stack also borrowed a popular business technique from the software world: building open-source tooling and charging for services.

It's a great strategy for finding both users and investors.

While investors’ interest is usually a good thing, I believe their appetite for data tooling actually serves the community poorly.

Here’s what we are discussing this week:

The News

Accessibility

Bad Business Model

The Good Example

The Crossroad

Reading time: 7 minutes

The News

Last week, Talend, a pivotal player in the open-source data stack world, discontinued their Open Studio. You can read the details here. But let's dissect what this means for the broader community.

Talend is a massive company in the data space. Although I wouldn’t consider Talend Open Studio part of the modern data stack movement, the company does have some involvement in the area.

Aside from the recently killed Open Studio and the enterprise-grade platform, they also own Stitch, one of the first no-code ELT (Extract, Load, Transform) services I ever used.

Stitch is a true pioneer in the data ingestion area. The company didn't just make the ELT process extremely accessible. It open-sourced Singer, the specification it uses to move data from any source to any destination.
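To make "any source to any destination" a bit more concrete: in the Singer specification, a tap writes JSON messages (SCHEMA, RECORD and STATE), one per line, to stdout, and a target reads them from stdin. That shared message format is what lets any tap be piped into any target. The snippet is a toy illustration in plain Python, assuming a single stream; it is not the singer-python library or Meltano's Singer SDK, and the stream name, fields and bookmark layout are hypothetical.

```python
import io
import json
from typing import Any, Dict, Iterable, Iterator, List, Optional, TextIO, Tuple

# Hypothetical stream and rows, used only for illustration.
STREAM = "users"
ROWS: List[Dict[str, Any]] = [
    {"id": 1, "email": "ada@example.com"},
    {"id": 2, "email": "linus@example.com"},
]


def tap_messages(rows: List[Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Yield Singer-style messages the way a tap would: SCHEMA, then RECORDs, then STATE."""
    yield {
        "type": "SCHEMA",
        "stream": STREAM,
        "schema": {
            "type": "object",
            "properties": {"id": {"type": "integer"}, "email": {"type": "string"}},
        },
        "key_properties": ["id"],
    }
    for row in rows:
        yield {"type": "RECORD", "stream": STREAM, "record": row}
    # STATE carries a (hypothetical) bookmark so a later run could resume after the last row.
    if rows:
        yield {"type": "STATE", "value": {"bookmarks": {STREAM: {"last_id": rows[-1]["id"]}}}}


def write_messages(messages: Iterable[Dict[str, Any]], out: TextIO) -> None:
    """A real tap writes one JSON message per line to stdout; any text stream works here."""
    for message in messages:
        out.write(json.dumps(message) + "\n")


def target_read(
    lines: Iterable[str],
) -> Tuple[Dict[str, List[Dict[str, Any]]], Optional[Dict[str, Any]]]:
    """A toy target: collect records per stream and remember the latest state."""
    tables: Dict[str, List[Dict[str, Any]]] = {}
    state: Optional[Dict[str, Any]] = None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "SCHEMA":
            tables.setdefault(message["stream"], [])
        elif message["type"] == "RECORD":
            tables.setdefault(message["stream"], []).append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return tables, state


if __name__ == "__main__":
    # In practice, tap and target are separate programs joined by a shell pipe.
    buffer = io.StringIO()
    write_messages(tap_messages(ROWS), buffer)
    tables, state = target_read(buffer.getvalue().splitlines())
    print(tables)
    print(state)
```

Because neither side knows anything about the other beyond the message format, swapping the source or the destination means swapping one program, which is exactly why a large catalogue of taps and targets needs so much ongoing maintenance.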

Now, I have to say, Singer is really why I'm not surprised by the decision to discontinue Talend Open Studio. Talend almost killed the Singer project, too. Without enough people to maintain it, supporting all the taps (sources) and targets (destinations) became arduous. There's a lot of interest from the community, but it's just not on Talend's agenda.

Luckily, Wise and, even more, Meltano Labs came to the rescue. They didn't just keep the Singer project afloat. They infused new life into it with innovative components and an entirely new Singer SDK. And that's the beauty of open-source: if a project is worth it, someone will raise it from the ashes.

Unfortunately, it's not all sunshine and roses. In fact, open-source data tools have many issues. Let's discuss a couple of them.

Accessibility

Since I mentioned Meltano, let's talk about it. As much as I admire the project, it isn't nearly as popular in the data community as it deserves to be. And it's not just Meltano. There are countless open-source, self-hosted tools teetering on the brink of greatness, yearning for more attention and care.

But here's the catch: the infrastructure is often the Achilles' heel. Starting is a breeze, but maintaining these tools is a different ball game. You can easily pick up Meltano's Getting Started guide and get hyped about the tool. But when it comes to deploying it to the cloud, you'll feel real pain.

It's relatively straightforward to deploy it once on a single EC2 instance. But running it in a scalable way on a Kubernetes cluster, with a single pod per job, is like scaling Everest. Heck, it's hard enough just to set up a proper CI/CD process.

Data teams are unwilling to spend that much time, money, and effort supporting something with such a low return on investment. Although I love working on data infrastructure, I agree with those teams.

Handling business requests, understanding the business's complexity, modelling processes, and presenting reports is hard enough. On top of that, you need to track what data is being used, who has access to what, support new projects, advise stakeholders and do hundreds of other things.

The last thing you want to do is spend a week upgrading and fixing dependencies just because you need a snazzy new feature.

And let's face it, many open-source data tools are the bread and butter of the companies behind them. So, inevitably, features get held back for monetisation. And while that's understandable (after all, these creators deserve compensation for their hard work), it does raise questions about the sustainability and future of free, open-source tools.

Bad Business Model

Let's delve into the business model of most open-source modern data stack (MDS) tools. They're often designed to attract users who might later pay for cloud offerings.

Remember our chat about dbt a few weeks back?

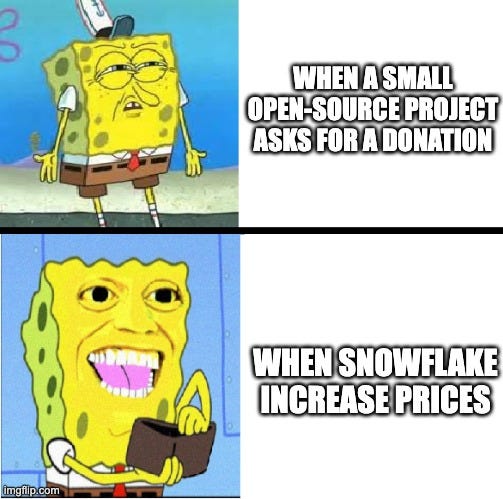

It's a similar story with most open-source data tools I know of. We, the users, become unwitting victims of this model. We start with the tool, excited by its potential and the freedom it offers, only to find ourselves in a bind when the company shifts its focus to monetising its cloud version, often at the expense of the open-source variant.

And what about Talend?

They didn't just shift focus. They pulled the plug on their free offering entirely.

I think the MDS tooling itself suffers as well. These companies show the world what they have built too early. They want to generate some hype and find early adopters. This might work initially. However, large customers have specific needs, and these tools usually lack the features their larger prospects require. So, the client checks out the next tool and never comes back.

But there's a glimmer of hope. You can have a successful open-source project in the data space, even if it's part of your commercial offering. Yet you still need a lot more. A great example is Apache Spark and Databricks. While Spark is at the heart of Databricks, plenty of other components make Databricks what it is.

The Good Example

We indeed have some good examples of open-source yet commercial tools. But what we need is a paradigm shift.

We need something closer to the Ruby on Rails model. In case you're unfamiliar, Ruby on Rails is an open-source web development framework for the Ruby language.

Its creator, DHH (David Heinemeier Hansson), extracted Rails from the codebase of Basecamp, a project management tool, to simplify web app development. He never intended to monetise it. He just wanted to share something incredibly user-friendly.

And because of its accessibility, it amassed a large user base, which translated into more testers and maintainers. If you have ever worked with Rails, you know what I mean. It's not just easy to get started. It's also straightforward to run in production.

On top of its accessibility and large user base, Rails is simply complete. It shipped with everything you needed to build a project from its very first release.

And the most significant bit?

Rails is backed and used by large enough companies interested in improving the project.

So, if we want a decent open-source tool with enough freedom and a stable future in the MDS, we need a tool that's easy to use, no matter the scale. This tool should not be the primary source of income for a company. It should be a labour of love, like Hudi from Uber or DataHub from LinkedIn.

The Crossroad

Here's the harsh truth:

I don't think we have a solid data integration tool in the open-source data world.

Current open-source data integration tools are cumbersome to set up, and it almost seems intentional. You're facing two choices:

Self-host your entire DataOps platform. That means you need to handle everything: deployment, upgrades, CI/CD, scalability and much more. That's fun but requires a team that excels in infrastructure and coding. You also need to be willing to invest time and nerves.

Invest in ready-made tools and enjoy peace of mind. On the other hand, you lose much of your flexibility. Not to mention, you are locked into whatever your vendor decides to do with the pricing.

Honestly, for most data teams I know, the second option is the way to go.

The hidden costs of maintaining (often buggy) infrastructure and processes usually outweigh the benefits of direct cost savings.

Don't get me wrong. I love open-source software. In fact, I'm part of a non-profit that organises free events dedicated to open-source software and hardware. We just held the 10th edition of our annual conference!

However, acknowledging these challenges is crucial for anyone involved in deciding which tools to adopt.

What’s your take? Hit Reply and tell me what you think.