Playbook: Data stack cost modelling - saving money and improving data culture

A story from Dext.

This newsletter is brought to you by the team at Count. Count is a data whiteboard making analytics and data modeling collaborative, transparent, and trusted. We’re running a live demo of Count next week with Co-founder & CEO Oliver Hughes. RSVP here.

[Quick recap] Playbooks are where we showcase the best of what data teams are doing: ideas you can implement quickly that have a big impact. In this playbook, we’ll dive into cost monitoring, specifically how Dext cut its Snowflake spend in half with a few interventions.

What I love about this particular playbook is that it is not your average cost-savings story. When I’ve been involved in cost-savings exercises, someone usually angrily tells me to stop doing some activity or using some tool, and I get defensive. Yordan has used a cost-savings goal to do the opposite: to repair relationships, and to help people have a deeper understanding of the company’s data.

Last week Yordan presented his story in a virtual coffee we hosted, and you can hear the recording here:

Background

Yordan Ivanov is the Head of Data and Analytics Engineering at Dext, a Series C cloud accounting platform. Dext has over 500 employees globally, and a data team of 15. Yordan and his team of 2 are in charge of the back end of the stack.

Stack: Snowflake, dbt, Looker, Meltano, and Hightouch

The problem

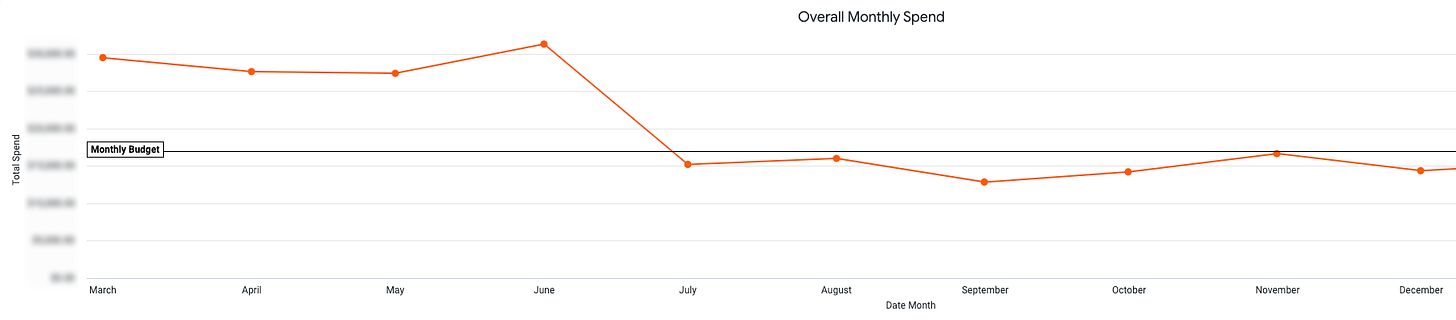

Last summer, Yordan was reviewing the company’s Snowflake costs and realized they were in trouble. At the rate they were spending, they were on track to use 24 months of budget in just over 12 months.

“What we needed to do was identify where we spent the most money. We knew broadly where our money was going but we didn’t know exactly where. This is where we had to start.” - Yordan

The approach

Target 1: Report freshness

The first target on Yordan’s list was report freshness.

“We had so many reports updating seven days a week when we really didn’t need them to be. We only work 5 days a week, and many were looked at less frequently.”

So Yordan went to the stakeholders of these reports and asked them to give up some of their freshness. Perhaps unsurprisingly, there was some pushback.

“As you can imagine, we faced some challenges with that. People were not willing to give that up.”

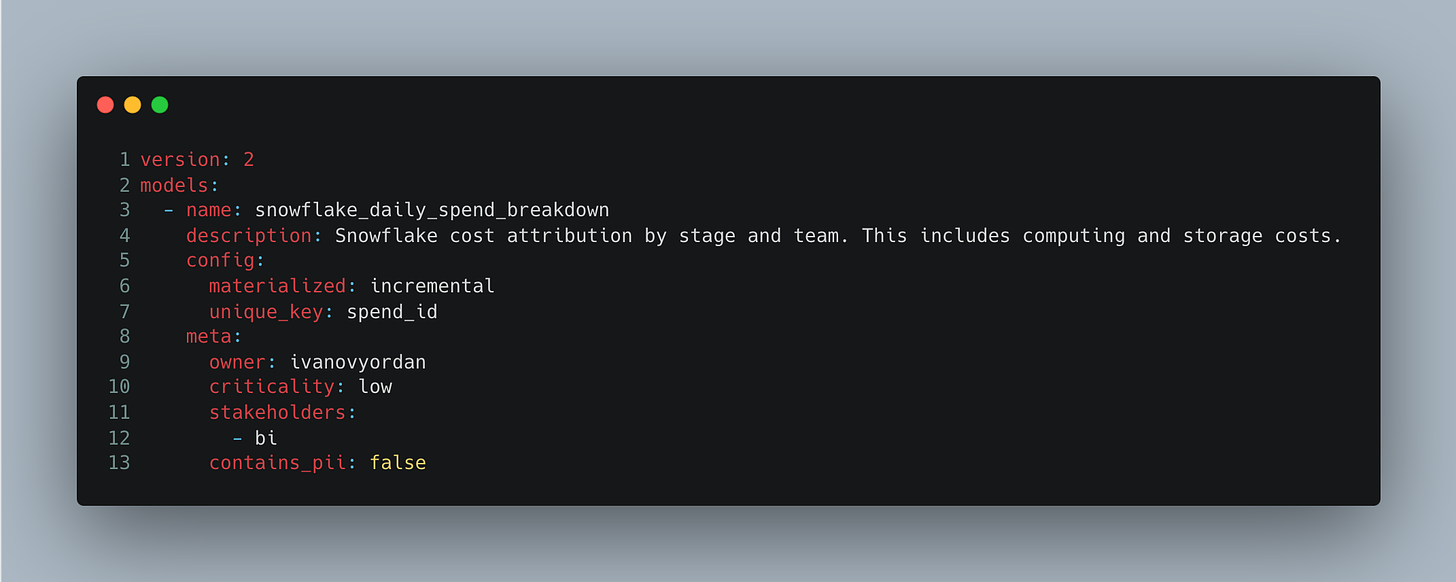

To prove that this change was needed, Yordan adopted Select.dev, a Snowflake cost-monitoring tool whose dbt package let him tag his dbt models with near-infinite granularity. He and his team tagged every one of their dbt models with details such as its stakeholders, the reports it fed, and its criticality. As a result, he could quantify the exact cost of each of those report refreshes.

“Now I could go back to each stakeholder who wasn’t willing to give up freshness and say ‘Here’s exactly how much that freshness costs. You either need to help us pay for that cost, or be willing to reduce the refresh frequency.’”
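To make per-report cost concrete, here is a minimal Python sketch of tag-based cost allocation. It is not Select.dev's implementation. It assumes you can export model runs with their runtime and warehouse size, and that each model carries tags like the ones described above; the model names, tag values and the $3.00 per-credit price are hypothetical. The credits-per-hour figures are Snowflake's standard warehouse rates. Attributing warehouse cost to individual runs by runtime is a simplification, because Snowflake bills a warehouse while it is running rather than per query.

```python
from collections import defaultdict
from dataclasses import dataclass

# Snowflake's standard-warehouse credit rates per hour of runtime.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}

# Hypothetical price; the real per-credit price depends on edition, region and contract.
PRICE_PER_CREDIT_USD = 3.0

# Hypothetical tags of the kind the team attached to every dbt model.
MODEL_TAGS = {
    "fct_invoices": {"report": "finance_weekly", "stakeholder": "finance"},
    "fct_sessions": {"report": "product_daily", "stakeholder": "product"},
}


@dataclass
class ModelRun:
    model: str
    warehouse_size: str
    runtime_seconds: float


def run_cost_usd(run: ModelRun) -> float:
    """Approximate a run's cost from its runtime and warehouse size."""
    credits = CREDITS_PER_HOUR[run.warehouse_size] * run.runtime_seconds / 3600
    return credits * PRICE_PER_CREDIT_USD


def cost_by_tag(runs: list[ModelRun], tag_key: str) -> dict[str, float]:
    """Sum run costs per tag value; models without the tag land in 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for run in runs:
        tag_value = MODEL_TAGS.get(run.model, {}).get(tag_key, "untagged")
        totals[tag_value] += run_cost_usd(run)
    return dict(totals)


runs = [
    ModelRun("fct_invoices", "MEDIUM", 900),
    ModelRun("fct_sessions", "SMALL", 1800),
    ModelRun("dim_misc", "XSMALL", 3600),
]
print(cost_by_tag(runs, "report"))
# {'finance_weekly': 3.0, 'product_daily': 3.0, 'untagged': 3.0}
```

The "untagged" bucket is worth watching: the more spend lands there, the less useful the conversation with stakeholders becomes.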

This proved very effective. Some stakeholders insisted it was worth the cost to keep their refresh frequency, but the majority were willing to go to less frequent updates.
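The freshness conversation largely comes down to simple arithmetic: cost per refresh multiplied by the number of refreshes. The sketch below compares a hypothetical report refreshed four times a day, every day, against once per weekday. The $2.50 per-refresh cost and the schedules are assumptions for illustration, not Dext's figures.

```python
import calendar
from datetime import date


def refreshes_in_month(year: int, month: int, runs_per_day: int, weekdays_only: bool) -> int:
    """Count scheduled refreshes in a month, optionally skipping Saturdays and Sundays."""
    _, days_in_month = calendar.monthrange(year, month)
    total = 0
    for day in range(1, days_in_month + 1):
        if weekdays_only and date(year, month, day).weekday() >= 5:  # 5 = Saturday, 6 = Sunday
            continue
        total += runs_per_day
    return total


def monthly_freshness_cost(cost_per_refresh_usd: float, year: int, month: int,
                           runs_per_day: int, weekdays_only: bool) -> float:
    """Monthly cost of keeping a report at a given refresh frequency."""
    return cost_per_refresh_usd * refreshes_in_month(year, month, runs_per_day, weekdays_only)


# Hypothetical report costing $2.50 per refresh, compared for March 2024.
current = monthly_freshness_cost(2.50, 2024, 3, runs_per_day=4, weekdays_only=False)
proposed = monthly_freshness_cost(2.50, 2024, 3, runs_per_day=1, weekdays_only=True)
print(current, proposed)  # 310.0 52.5
```

Putting both numbers side by side gives the stakeholder a clear choice: fund the difference or accept the lower frequency.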

Target 2: Changing dbt habits

With robust tagging in place, Yordan found other sources of runaway costs, and a major culprit was the dbt CI/CD process.

“They literally pushed every single piece of code and waited for the CI checks to run but those are quite heavy. We have multiple refreshes that run each time that process kicks off. We work with production data from time to time too which is slow and expensive. We really needed to change this mindset.”

Yet again, Yordan faced pushback when confronting dbt users. The default CI/CD process was easy and they were comfortable with it.

“Most people don’t think about all the processes that happen behind the scenes. We had to educate them, train them in what is actually happening when they open a PR. Loads of training was required, but eventually people were happy to have a better understanding of how it all works and they went to a more localized testing environment.”
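Training lands better when it is backed by numbers. As a minimal sketch, assuming you can export one record per CI execution with its PR number and an estimated cost (for example, from tagged queries), you could summarize CI spend per PR and flag PRs that triggered an unusually high number of runs. The PR numbers, the $1.50 per-run cost and the threshold of five runs are all hypothetical.

```python
from collections import defaultdict


def summarize_ci_spend(ci_runs: list[tuple[int, float]]) -> dict[int, dict]:
    """Aggregate (pr_number, cost_usd) records into per-PR run counts and total cost."""
    summary: dict[int, dict] = defaultdict(lambda: {"ci_runs": 0, "cost_usd": 0.0})
    for pr_number, cost_usd in ci_runs:
        summary[pr_number]["ci_runs"] += 1
        summary[pr_number]["cost_usd"] += cost_usd
    return dict(summary)


def prs_over_threshold(summary: dict[int, dict], max_runs: int) -> list[int]:
    """PR numbers that triggered more CI runs than max_runs."""
    return sorted(pr for pr, stats in summary.items() if stats["ci_runs"] > max_runs)


# Hypothetical CI log: PR 101 was pushed commit by commit, PR 102 after local testing.
ci_log = [(101, 1.50)] * 12 + [(102, 1.50)] * 2
summary = summarize_ci_spend(ci_log)
print(summary[101], prs_over_threshold(summary, max_runs=5))
# {'ci_runs': 12, 'cost_usd': 18.0} [101]
```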

Target 3: Going back to manual model re-runs

The last place Yordan sought to make a big difference was the large full-model refreshes. These were some of the biggest sources of Snowflake spend, and he was eager to cut back on them as much as he could.

“Instead of those big models refreshing daily or multiple times a day we came up with a schedule. On specific days each month we would fully refresh those models. We made that schedule available to everyone in a Slack thread. We also let them request manual refreshes if they needed.”

This change to manual refreshes flew in the face of the agile approach Yordan and his team had been taking with their data models, but it was a trade-off he was happy to make given the savings they were able to realize.
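A fixed monthly schedule plus on-request refreshes is easy to encode. The sketch below assumes the heavy models are incremental dbt models rebuilt with dbt's `--full-refresh` flag; the refresh days (the 1st and 15th), the model name `fct_ledger`, and representing Slack requests as a set of `(model, date)` pairs are all hypothetical choices for illustration. It only builds the dbt command rather than running it.

```python
import calendar
from datetime import date

# Hypothetical schedule: days of the month on which heavy models are fully rebuilt.
FULL_REFRESH_DAYS = {1, 15}


def needs_full_refresh(model: str, day: date, manual_requests: set[tuple[str, date]]) -> bool:
    """True on a scheduled day, or when someone requested a manual refresh for that date."""
    return day.day in FULL_REFRESH_DAYS or (model, day) in manual_requests


def full_refresh_dates(model: str, year: int, month: int,
                       manual_requests: set[tuple[str, date]]) -> list[date]:
    """All dates in a month on which the model would be fully refreshed."""
    _, days_in_month = calendar.monthrange(year, month)
    return [
        date(year, month, d)
        for d in range(1, days_in_month + 1)
        if needs_full_refresh(model, date(year, month, d), manual_requests)
    ]


def full_refresh_command(model: str) -> list[str]:
    """dbt command that rebuilds an incremental model from scratch."""
    return ["dbt", "run", "--full-refresh", "--select", model]


requests = {("fct_ledger", date(2024, 3, 20))}  # e.g. a request raised in the Slack thread
print(full_refresh_dates("fct_ledger", 2024, 3, requests))
print(full_refresh_command("fct_ledger"))
```

Publishing the output of something like `full_refresh_dates` is one way to give stakeholders the same "no surprises" view of the schedule that the Slack thread provided.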

This was about more than cost savings

Each of these cost-reduction activities required heavy involvement from others, both in the business and in other parts of the data team. As a result, Yordan noticed a large shift in those relationships and, more broadly, in the company’s culture around data.

“Culturally, it was a big benefit. With refresh schedules we had fewer surprises for our stakeholders and they felt more involved in the process. All of our dbt users had a much deeper understanding of what was really going on, and there was a greater sense of shared ownership of data between us and the business.”

Yordan’s tips for doing this yourself

1. Start with getting visibility into your spend

The first thing you need to do is set up a report or dashboard that shows your spend for the last month or quarter, so you can see how you’re tracking against your budget. Then, ideally, use a tool like Select.dev to start allocating cost to specific models, queries, dashboards, and stakeholders.
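The core of a spend-versus-budget report is a burn-rate projection, which is what surfaces a problem like "24 months of budget in just over 12 months". Here is a minimal sketch; the budget and spend figures are hypothetical and simply chosen to mirror that scenario, and it assumes spend so far is a fair guide to future monthly spend.

```python
def budget_status(budget_usd: float, budget_months: int,
                  spend_to_date_usd: float, months_elapsed: float) -> dict[str, float]:
    """Compare the actual monthly burn rate with the rate the budget was planned for."""
    if months_elapsed <= 0 or spend_to_date_usd <= 0:
        raise ValueError("need positive spend and elapsed time to project a burn rate")
    monthly_burn = spend_to_date_usd / months_elapsed
    planned_monthly = budget_usd / budget_months
    return {
        "monthly_burn_usd": monthly_burn,
        "planned_monthly_usd": planned_monthly,
        # 2.0 means spending twice as fast as planned.
        "burn_ratio": monthly_burn / planned_monthly,
        # Counted from the start of the budget period, not from today.
        "months_until_exhausted": budget_usd / monthly_burn,
    }


# Hypothetical figures: a 24-month budget being spent twice as fast as planned.
status = budget_status(budget_usd=240_000, budget_months=24,
                       spend_to_date_usd=60_000, months_elapsed=3)
print(status["burn_ratio"], status["months_until_exhausted"])  # 2.0 12.0
```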

2. Make sure people are aware of and accountable for the cost they generate

Most people have no idea how expensive it is to re-run a dashboard or test a new model. You can use this cost allocation to start educating people about how their behavior affects the back end, and what that costs. This is how you start to create a shared sense of ownership over the data itself.

3. It’s about controlling cost rather than saving cost

Cost-cutting conversations often turn into punishing people for writing inefficient queries. There may be some savings there, but Yordan advises thinking about controlling cost rather than saving it. Writing more efficient queries is one way to save cost, but it is not necessarily a good control mechanism. Gating refreshes, for example, is a much better one.
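One way to picture gating as a control mechanism is a per-report monthly allowance that each refresh request is checked against. This is a hypothetical sketch, not a description of Dext's setup: the `RefreshGate` class, the report name and the $10 allowance are assumptions, and the per-refresh cost estimate would come from whatever cost allocation you already have.

```python
from dataclasses import dataclass, field


@dataclass
class RefreshGate:
    """Approve a refresh only while a report stays within its monthly allowance."""
    monthly_allowance_usd: dict[str, float]
    spent_usd: dict[str, float] = field(default_factory=dict)

    def request(self, report: str, estimated_cost_usd: float) -> bool:
        spent = self.spent_usd.get(report, 0.0)
        # Reports without an agreed allowance get none by default.
        allowance = self.monthly_allowance_usd.get(report, 0.0)
        if spent + estimated_cost_usd > allowance:
            # Refused; the caller can go back to the stakeholder to approve or fund it.
            return False
        self.spent_usd[report] = spent + estimated_cost_usd
        return True

    def reset_month(self) -> None:
        """Clear recorded spend at the start of a new month."""
        self.spent_usd.clear()


gate = RefreshGate(monthly_allowance_usd={"finance_weekly": 10.0})
print([gate.request("finance_weekly", 4.0) for _ in range(3)])  # [True, True, False]
```

The point of the design is that the limit is applied before the spend happens. Query tuning, by contrast, only changes what each run costs after the fact.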

Some additional reflections

Anything can be a way to improve culture. Even something as seemingly punitive as a cost-savings exercise can be re-orientated to bring people together and have a positive impact. One Head of Data I spoke to put it well:

“Some people get frustrated that no one asks them to build a decision tree. Well, if you think their request would be better served with a decision tree than a dashboard, then make a decision tree! The opportunity is already there, you just have to look at it differently.”

Data tool pricing structures can suck. Every company has been raising its prices over the last few years, but there also seems to be a trend of companies, maybe even particularly data companies, obfuscating pricing, making it hard for customers to understand, let alone manage, the amount they pay. As a member of a data company, maybe I take this on board more than most.

What’s next?

We have a few more playbooks coming up over the next few weeks, some experimentation with more audio, and a big deep dive into data team structures in the works.
