6 Proven Steps to Build a Data Platform Without Breaking The Bank

Learn how to use the power of the modern data stack for a divine analytics experience

And it's a wrap!

This is the last issue of Data Gibberish for 2023. I'll take an extended break, and the next newsletter article will hit your inbox on January 10, 2024.

We had a blast this year. I started posting consistently just a couple of months ago. The community grew from 0 to more than 200 subscribers, which surpassed my expectations!

Many of you reached out on Substack and LinkedIn, and I enjoyed that! Please keep getting in touch, as I love talking to friendly and curious people like you.

I hope you’ll take a nice break, too, and I'll chat with you in the next one.

You are starting a new data platform. You've checked out some big, all-in-one tools that promise more than you could imagine. But these tools are too expensive, and you want to avoid locking yourself in with a particular vendor.

During your research, you came across the Modern Data Stack (MDS) concept. You like the flexibility and the costs but need help figuring out what you need, as the tooling is too scattered.

In today's issue, I'll show you how to start a new data platform using the modern data stack. Moreover, you'll learn how to do it quickly and iteratively without breaking the bank.

Here's the breakdown:

The Requirements

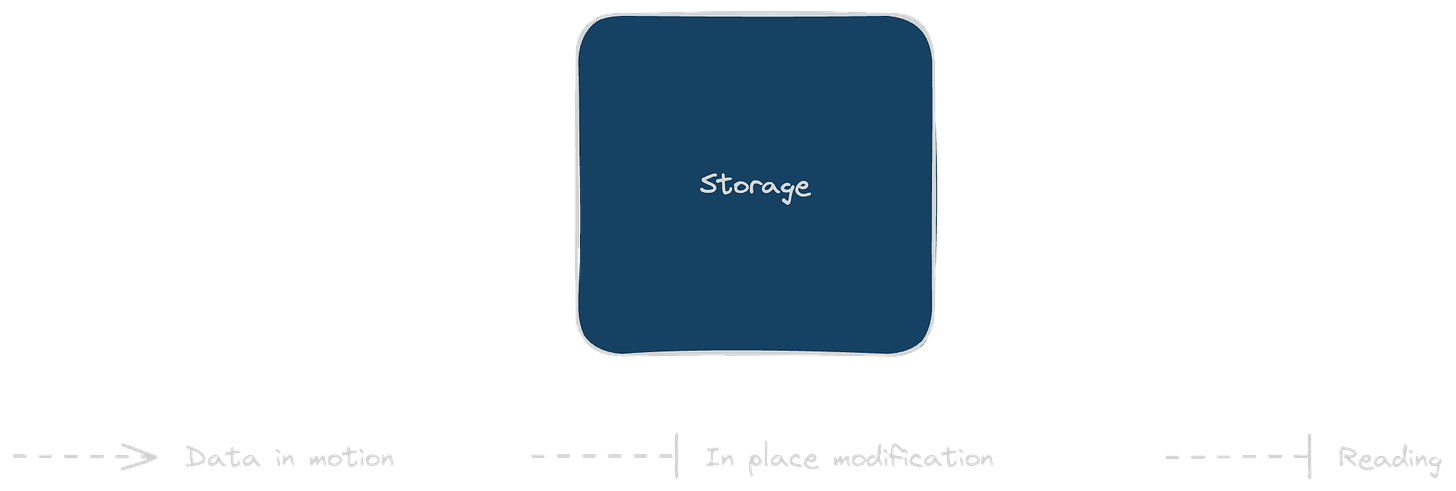

Storage

Ingestion

Transformation

Visualisation

Observability

Reverse ETL

The Minimum

Reading time: 8 minutes

The Requirements

My recommendation is to start with collecting some basic requirements. Answer questions like:

What metrics do I need to report first?

Where does the data I need live?

How long can I wait before refreshing my reports?

Your stakeholders will likely tell you they want all the data and need it in real-time. Some may even request predictive analytics and GenAI capabilities. But that's rarely what they need, especially in the beginning.

Throw these requests in the bin. Discuss the importance of a short go-to-market (GTM) time with your stakeholders. Identify one or two impactful metrics you can compile from just a few sources. Opt for weekly updates.

Now for the other part of the story. Collect your platform requirements:

Where do you host it?

What is your budget?

How much of it should you build or buy?

Go with cloud solutions. If possible, prioritise tools with free tiers. Do not spend money on something you may not need at all. Use as many prebuilt tools as possible. Reduce DIY work as much as possible.

Open-source tools are cool, but you want to start quickly and deliver soon. Refrain from spending time provisioning your infrastructure before understanding what you need. You can always change pieces of the puzzle at a later stage.

Now, let's discuss what components and tools you need for your data platform.

Storage

This is where you'll load all the data you have. This is also the back end for your dashboards. Think of it as the database for your data platform app.

Choose a simple warehousing solution that does not require too much maintenance. Ideally, you should be able to upload a CSV and query the database using SQL.
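Here is a minimal sketch of that "upload a CSV, query with SQL" workflow. It uses Python's built-in sqlite3 as a local stand-in for a cloud warehouse, so the table name, columns, and sample rows are all hypothetical. A real warehouse would use its own loader, but the shape of the work is the same.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export; in practice this would be a file from a source system.
CSV_TEXT = """account_id,plan,monthly_revenue
1,free,0
2,pro,49
3,pro,49
4,enterprise,499
"""


def load_csv(conn, table, csv_text):
    """Create a table from the CSV header and insert every data row."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{name}"' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    rows = list(reader)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    return len(rows)


# SQLite in memory stands in for the warehouse (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
loaded = load_csv(conn, "accounts", CSV_TEXT)

# CSV values arrive as text, so cast before aggregating.
revenue_by_plan = conn.execute(
    """
    SELECT plan, SUM(CAST(monthly_revenue AS INTEGER)) AS revenue
    FROM accounts
    GROUP BY plan
    ORDER BY revenue DESC
    """
).fetchall()

print(loaded, revenue_by_plan)
```

If your warehouse lets you get this far in an afternoon, it is simple enough for a first iteration.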

In my experience, data lake solutions are not reasonable when you are still exploring your needs. They are too complex, need too much maintenance, and have too many features you may not need initially.

Redshift is a good choice for a data warehouse because it's cheap. However, it scales poorly and requires more attention than other cloud-native solutions.

Snowflake is an excellent cloud-based data warehouse. It's scalable, performs well, and integrates seamlessly with other tools. If you know me, you know it's my favourite data warehouse.

Another good option is BigQuery if you're on Google Cloud Platform (GCP). BigQuery has a more generous free tier than Snowflake, but it comes from Google…

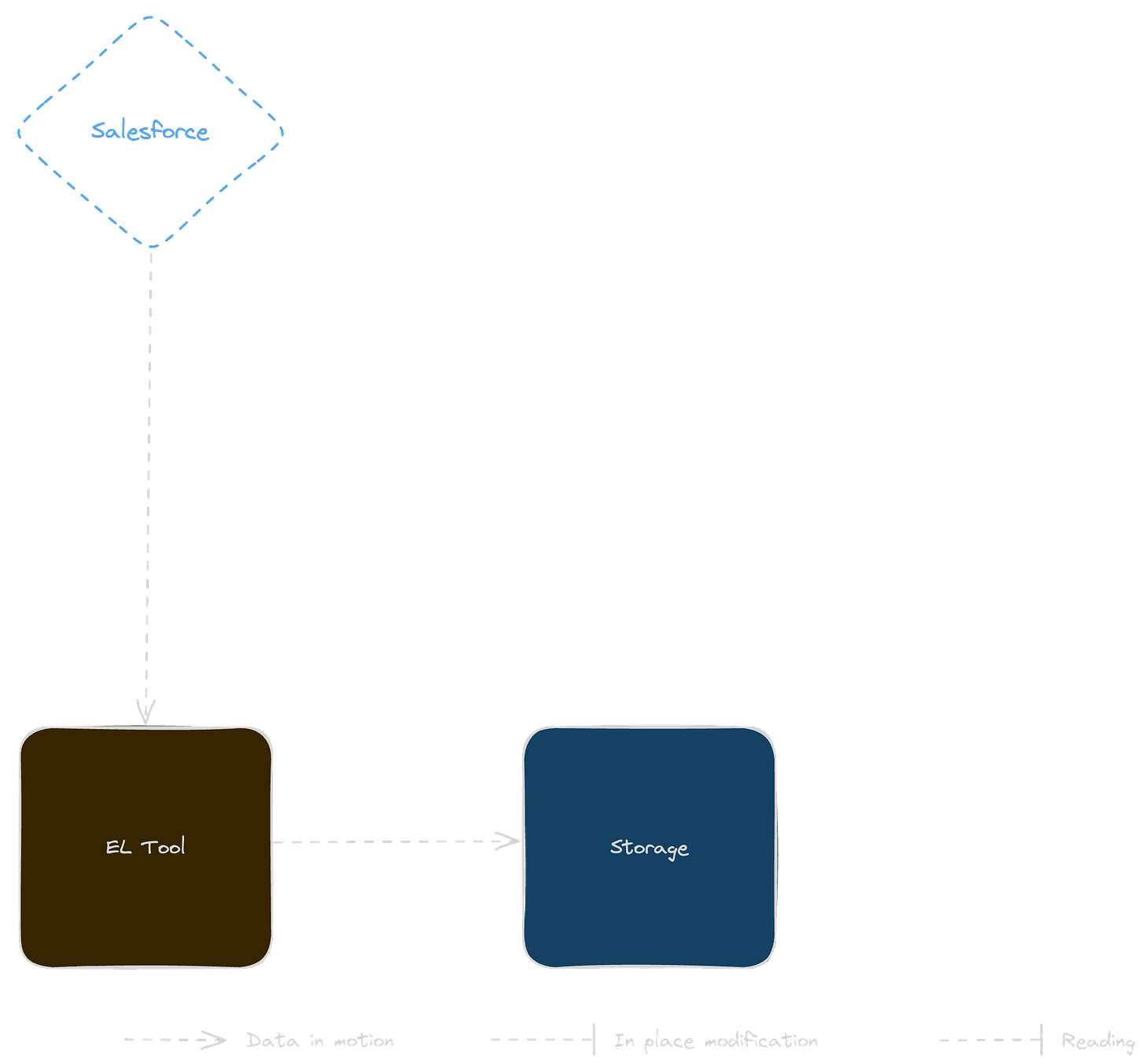

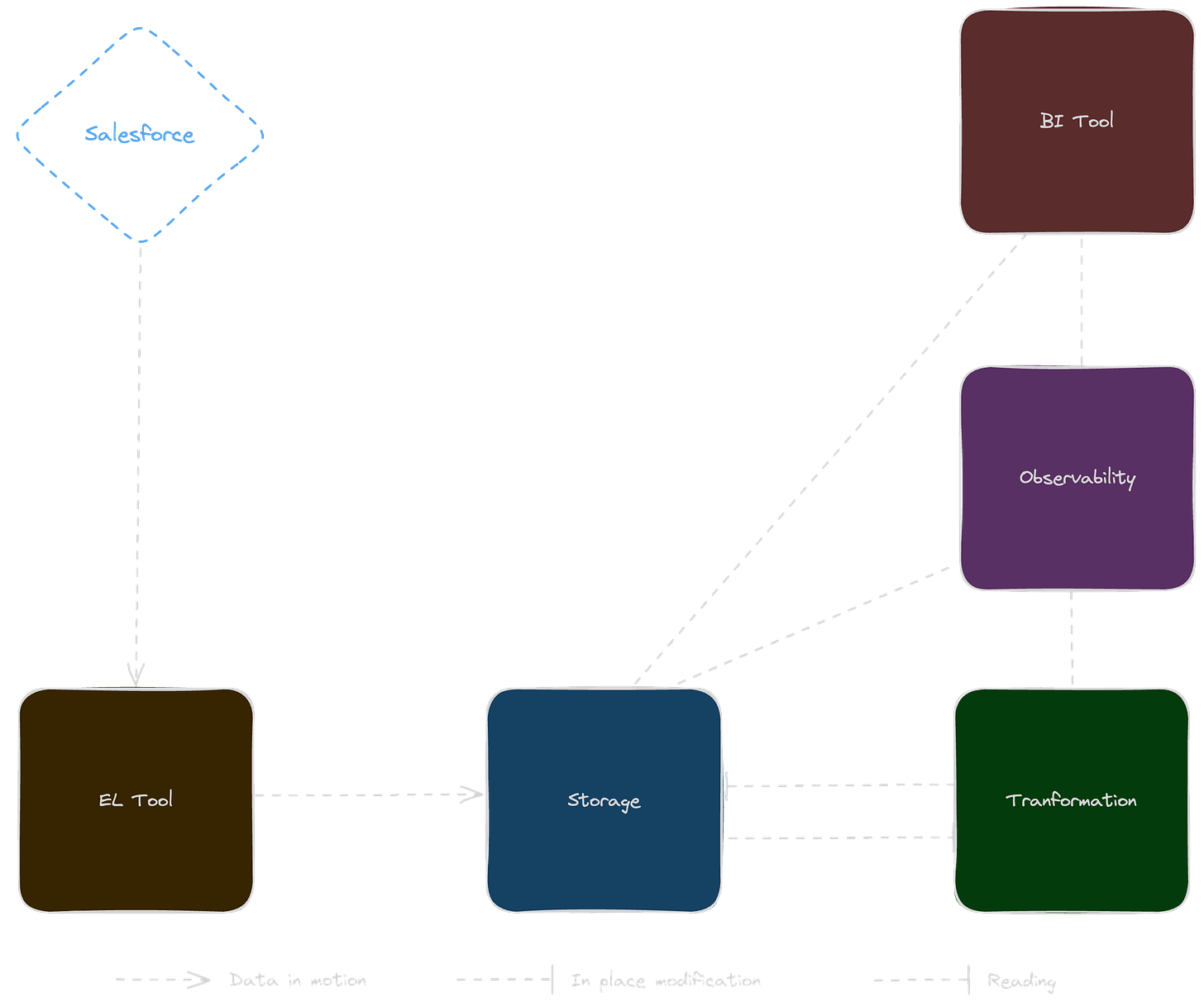

Ingestion (EL)

Right, you know which warehouse to use, but you need a way to get some data into it.

Your data usually resides in your application's database or services like Salesforce and HubSpot. You must extract it and load it into the data warehouse.

You can dump CSV files (I've done that), but that doesn't work in the long run. You need an automated tool.

Again, I don't recommend going with a complicated solution.

Scaling Spark with EMR is just terrible. Not to mention how hard it is to find the people who can support that complex infrastructure and code.

If you follow me on LinkedIn, you know how much I like Singer and Meltano, but I wouldn't recommend that either. It may take you weeks to deploy Meltano in a Kubernetes environment.

I suggest going with Fivetran. It has an excellent free tier for starters and supports the most popular sources.

Another honourable mention is Stitch.

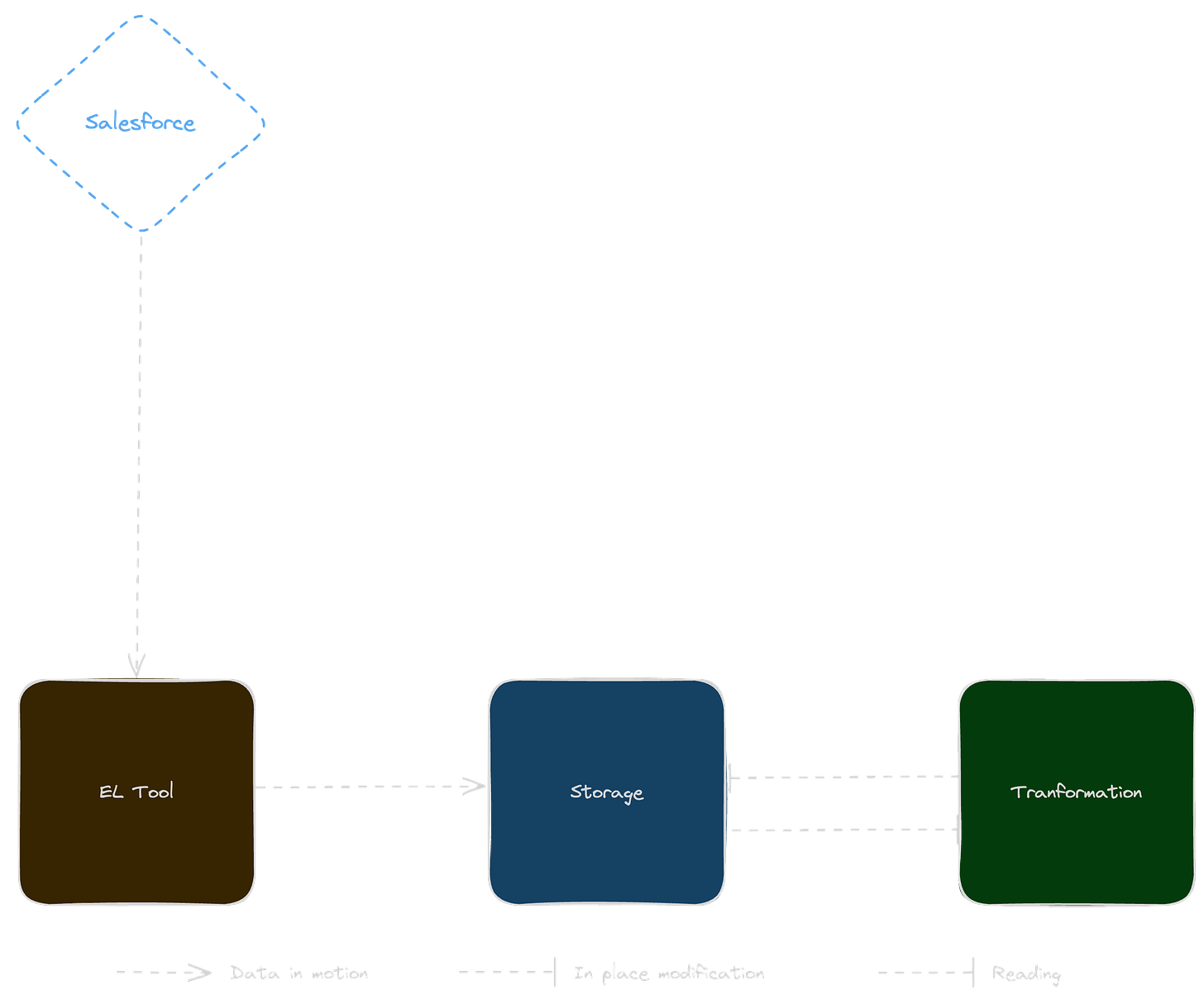

Transformation

So, you have all the data from your sources and can see everything in one place.

Unfortunately, this data is too application-oriented and hard to understand for business stakeholders.

How do you combine the information about an account if it lives on three different systems?

How do you make sure your peers measure churn correctly?

That's simple. Just use dbt!

dbt has everything you need to model the complexity of your organisation.
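To make the two questions above concrete, here is a rough sketch of the kind of query a transformation layer holds. dbt models are SQL SELECT statements. This one runs through Python's sqlite3 only so that it is runnable on its own. The three source tables, their columns, and the churn definition (an account with a cancellation date) are all assumptions for illustration, not a recommended definition.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hypothetical raw tables, as an ingestion tool might land them.
    CREATE TABLE app_accounts (account_id INTEGER, name TEXT, cancelled_at TEXT);
    CREATE TABLE crm_accounts (account_id INTEGER, owner TEXT);
    CREATE TABLE billing_subscriptions (account_id INTEGER, mrr INTEGER);

    INSERT INTO app_accounts VALUES
        (1, 'Acme', NULL), (2, 'Globex', '2023-11-30'), (3, 'Initech', NULL);
    INSERT INTO crm_accounts VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO billing_subscriptions VALUES (1, 499), (2, 49), (3, 49);
    """
)

# One business-facing "accounts" model that joins the three systems and
# defines churn in exactly one place.
ACCOUNTS_MODEL = """
    SELECT
        a.account_id,
        a.name,
        c.owner,
        b.mrr,
        a.cancelled_at IS NOT NULL AS is_churned
    FROM app_accounts AS a
    LEFT JOIN crm_accounts AS c ON c.account_id = a.account_id
    LEFT JOIN billing_subscriptions AS b ON b.account_id = a.account_id
"""
conn.execute(f"CREATE VIEW accounts AS {ACCOUNTS_MODEL}")

accounts = conn.execute("SELECT * FROM accounts ORDER BY account_id").fetchall()

# Everyone who queries the view gets the same churn number.
churn_rate = conn.execute("SELECT AVG(is_churned) FROM accounts").fetchone()[0]

print(accounts)
print(round(churn_rate, 2))
```

The point of the design is that the join logic and the churn rule live in one shared model, so your peers stop re-deriving them in every report.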

Don't try to self-host it. dbt Cloud is fantastic if you are just starting. It has a text editor, orchestrator, CI/CD, and much more.

There are some alternatives, of course, but nothing comes close to dbt's popularity and community.

But wait — there's more!

Businesses are usually complex. That complexity will be reflected in your modelling.

Unfortunately, an AI that can do all the modelling for you still doesn't exist. You'll need to write a lot of SQL, so prepare to spend most of your time doing that from now on.

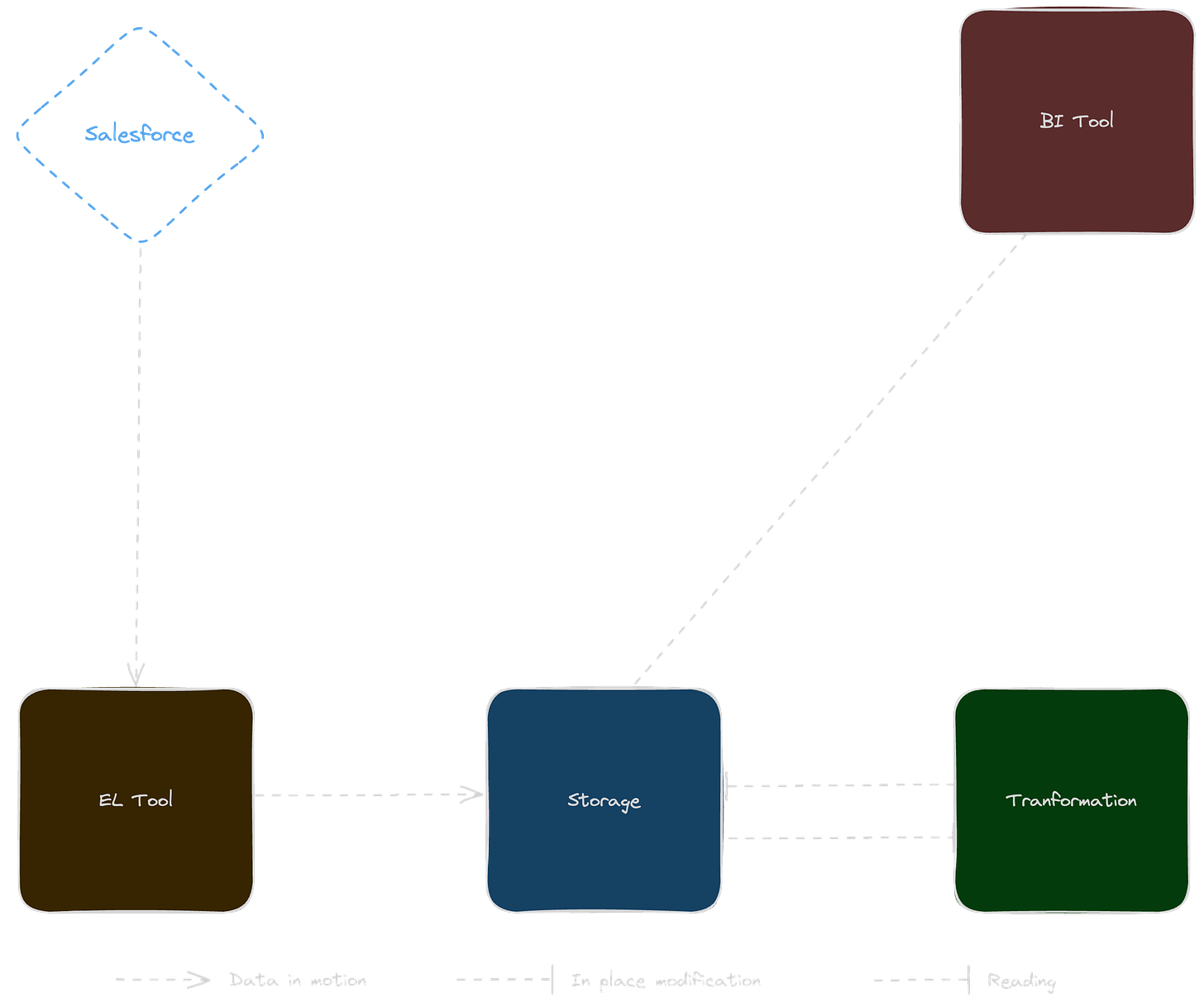

Visualisation

Congrats! You jumped through all the hoops, from pulling data to modelling it into a place that's your single source of truth.

That's huge!

Now, you have to show these sweet insights to the rest of the business.

Instead of asking them to run complex queries and pray, you need to give them a visual and easy-to-use BI tool.

I recommend using Lightdash. It is only free if you self-host it, but it is among the cheapest options on the market.

The most significant benefit of Lightdash is its integration with dbt. You build your tables and metrics in dbt, and they automatically appear in Lightdash.

The good news is this space is rich in nice visualisation tools. Some alternatives are Metabase, Omni, and Power BI.

Each one of them has its pros and cons. You can switch later, but keep in mind your stakeholders will need some time to get used to the new tool.

Observability

Do you know how to lose trust quickly?

By providing broken metrics and relying on your stakeholders to tell you about them.

If you are just starting, dbt tests may be enough for you. Yet, I strongly suggest investing in observability early.

These tools can watch dbt tests and check freshness. They can also notify you when something is off without causing your pipelines to fail.
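As a rough illustration of "notify, don't fail", here is a sketch of two checks that return warnings instead of raising errors. It is not how any particular observability tool works. The `orders` table, its columns, the seven-day threshold, and the fixed "now" are all hypothetical, and sqlite3 again stands in for the warehouse.

```python
import sqlite3
from datetime import datetime, timedelta


def check_not_null(conn, table, column):
    """Return a warning string if the column contains NULLs, else None."""
    nulls = conn.execute(
        f'SELECT COUNT(*) FROM "{table}" WHERE "{column}" IS NULL'
    ).fetchone()[0]
    if nulls:
        return f"{table}.{column}: {nulls} NULL value(s)"
    return None


def check_freshness(conn, table, column, now, max_age):
    """Return a warning string if the newest timestamp is older than max_age."""
    latest = conn.execute(f'SELECT MAX("{column}") FROM "{table}"').fetchone()[0]
    if latest is None:
        return f"{table}: no rows loaded"
    age = now - datetime.fromisoformat(latest)
    if age > max_age:
        return f"{table}: last loaded {age.days} day(s) ago"
    return None


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, loaded_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10, "2023-12-04T08:00:00"), (2, None, "2023-12-05T08:00:00")],
)

# A fixed "now" keeps the sketch deterministic; a real check would use the clock.
now = datetime(2023, 12, 20)
checks = [
    check_not_null(conn, "orders", "customer_id"),
    check_freshness(conn, "orders", "loaded_at", now, timedelta(days=7)),
]

# Collect warnings instead of raising, so the pipeline keeps running.
alerts = [message for message in checks if message is not None]
for message in alerts:
    print("WARNING:", message)
```

In practice you would send `alerts` to a chat channel or email rather than print them.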

Synq is my favourite tool in that space. It gives you a full view from ingestion to the dashboard. Elementary is a good option, too.

Again, there are plenty of tools here. Keep it simple rather than opting for something that would cost half of your budget.

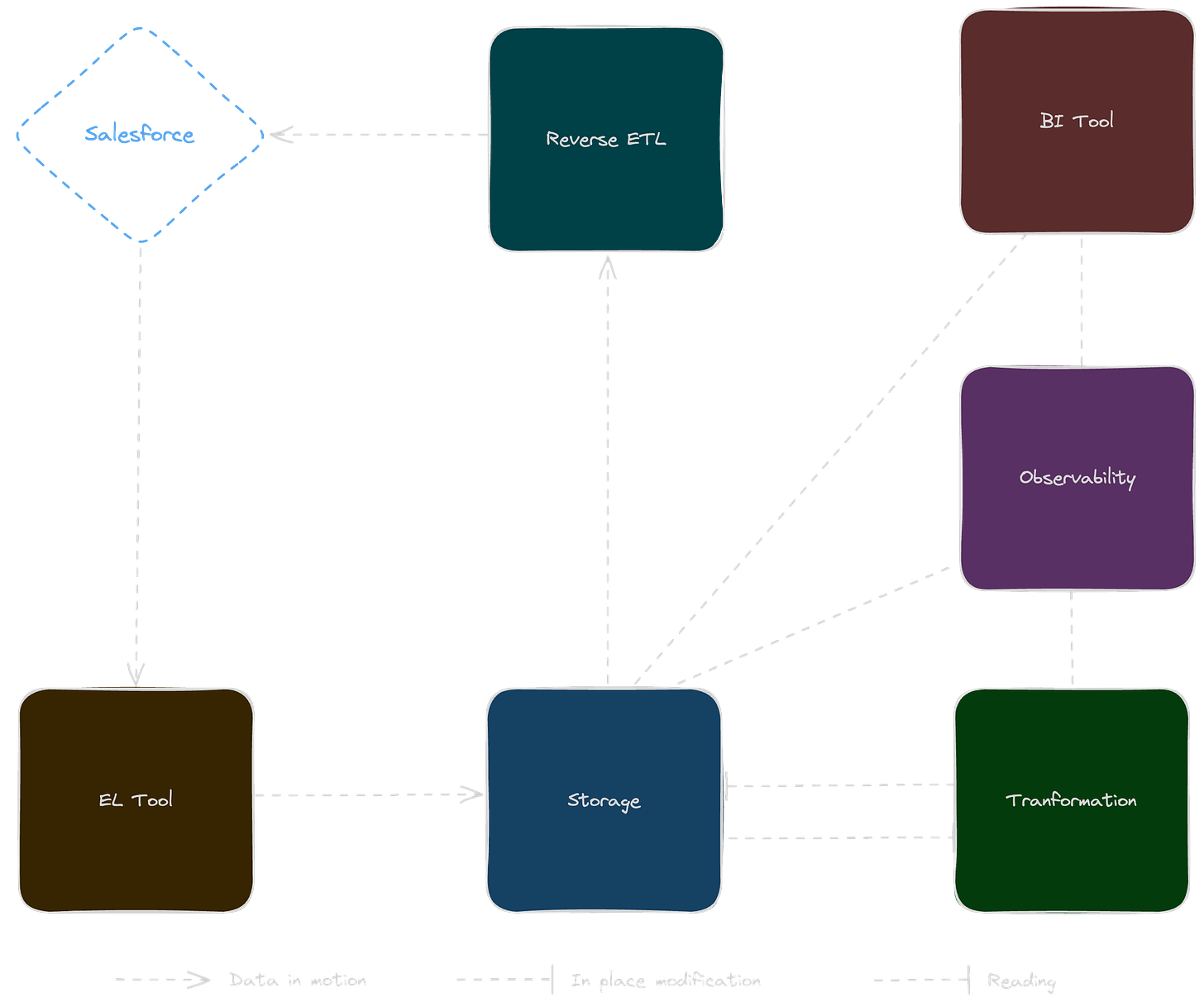

Reverse ETL

People will want you to push data to Salesforce if you do your job well. And it makes sense. You don't want stakeholders to juggle tools to find their customers’ information.

You may not need this on day one, but you will need it on day two or as soon as your sales team finds out what you have done.

Hightouch is my favourite way to enrich objects in cloud services. It has a free tier and integrates exceptionally well with dbt. It's just brilliant.

Alternatively, you could use Census, which is also a great tool. Sometimes, you can even use your EL tool for a few extra bucks.

The Minimum

We discussed six groups of tools for building a robust modern data stack platform. Once you have that in place, you will be good for years.

I recommend you start reporting as soon as you have set up a warehouse and an ingestion tool. Once you have loaded your data, you can schedule snippets of SQL to run weekly. Then, you can dump the results into CSV files and create beautiful dashboards with Excel.

In fact, no matter how good your data platform is, you'll need to dump data into Excel. Take that as an axiom.
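A minimal sketch of that "SQL snippet to CSV" step could look like the following. It assumes a hypothetical `accounts` table and uses sqlite3 as a stand-in for the warehouse connection. The weekly scheduling is left to whatever you already have, such as cron or your warehouse's own scheduler.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def export_query_to_csv(conn, sql, path):
    """Run a query and write the result, with a header row, to a CSV file."""
    cursor = conn.execute(sql)
    header = [column[0] for column in cursor.description]
    rows = cursor.fetchall()
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(header)
        writer.writerows(rows)
    return len(rows)


# Hypothetical data; a real run would connect to the warehouse instead.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (account_id INTEGER, plan TEXT)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?)", [(1, "free"), (2, "pro"), (3, "pro")]
)

WEEKLY_REPORT_SQL = """
    SELECT plan, COUNT(*) AS accounts
    FROM accounts
    GROUP BY plan
    ORDER BY plan
"""

# Write into a temporary directory so the sketch leaves nothing behind.
with tempfile.TemporaryDirectory() as folder:
    report_path = Path(folder) / "accounts_by_plan.csv"
    written = export_query_to_csv(conn, WEEKLY_REPORT_SQL, report_path)
    report_lines = report_path.read_text(encoding="utf-8").splitlines()

print(written, report_lines)
```

The resulting file opens directly in Excel, which is all the first iteration needs.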

Here's the correct order of tooling to implement:

Storage: You need a place to store data;

Ingestion: Start pulling data automatically;

Visualisation: Build slow but impactful dashboards;

Transformation: Faster and more standardised reporting;

Observability: Make sure you provide correct data;

Reverse ETL: Enrich other tools with data from your single source of truth.

Summary

Navigating the waters of the modern data stack may be a bit overwhelming initially. Today, we took the steps to learn what you need as a starter. What we discussed may serve you well for at least a few years.

I told you what components to start with and even recommended some tools.

Here are my recommendations in short:

Storage - Snowflake

Ingestion - Fivetran

Transformation - dbt

Visualisation - Lightdash

Observability - Synq

Reverse ETL - Hightouch

You can start with virtually zero budget with the stack I suggested and increase your costs as you go. But price is not the most important thing here.

The modern data stack is modular and scalable. It minimises your go-to-market time without locking you into a single vendor.

That was it. If your plans for the future include building a new data platform, the journey starts now. Follow the roadmap we've laid out, embrace the power of the modern data stack, and turn your aspirations into achievements.

Did you enjoy that piece? Follow me on LinkedIn for daily updates.